First, you must allow the Linux server full rights and access to the Windows workgroup and local domain. That means that either in your Active Server environment you need to reserve an IP address for the Linux server, or you simply need to ensure a hard coded IP address is given to the Linux server. The server must have a reserved IP address whether you deliver it via DHCP or statically assign it in the DNS. This also means that you need to ALLOW SMB DAEMON to operate. You can ensure your system security does this by editing your Firewall setup or using system-config-securitylevel .

Second, you must ensure that SAMBA (SAMBA tools and the SMB protocol) is installed and running on the Linux server. Most versions of Fedora come with the necessary packages. You should also be sure to update your SAMBA security levels properly if you are having trouble accessing a shared directory. This is found under Samba Server Configuration tool, main menu Properties, option Server Settings, and then the tab: Security.

Now you are ready to configure your Fedora Linux/SAMBA machine... aka. SAMBA Server.

STEP 1: Enable Network Connectivity to the SAMBA Server

Using the Fedora Network Configuration tool you will need to ensure that the ethernet card is enabled and properly functioning. Get quick access to the tool through this command: system-config-network

Once in the Network Configuration tool, you should ensure that your ethernet device is enabled. If it is not, select the eth device and then click on the Edit button. This will allow you to input the vital network adapter settings including: statically set IP address, subnet mask, and gateway. You should also select the top checkbox labeled Activate device when computer starts. Read this article for more details regarding configuring a network card in Fedora, Mandrake, or SuSE.

Close and save any changes you've made. The main goal is to ensure you have an ACTIVE and functioning network card on the SAMBA server.

Restart the network services or simply reboot your SAMBA server. Now try a ping to the server from another PC on the same subnet. At a command prompt, for example, type: ping 10.2.2.3

The ping should come back good validating your network connection. If you need more Linux system administration help read my Admin Commands List.

STEP 2: Update Firewall Settings

In most cases the default Firewall setting on the SAMBA server locks out any inbound network requests. I've had a great many people come running to me about this issue. If you're setting up a basic SAMBA server within your business intranet, allow your ethernet connection to be a trusted device so others can get to your SAMBA server and not be bounced by the server's Firewall.

NOTICE: if you plan to use the SAMBA server outside of your business firewall/intranet you should NOT follow the next step. Instead you allow your local server to receive packets by making changes to your IPTABLES, such as:

iptables -A INPUT -s 192.168.0.0/255.255.255.0 -j ACCEPT

The following step is for those using an intranet business server configuration.

Okay, now to allow your intranet based SAMBA server to properly accept incoming requests, from your Main menu choose System Settings, then Security Level. You can access this also by typing the command:

system-config-securitylevel

Please select the box next to the ethernet card you are using for intranet connectivity so that it becomes a TRUSTED DEVICE. Otherwise you have a super secure server that bounces inbound requests. Notice, this selection effects all the items in the Services listing above it, so please be careful in what context you allow a trusted device!

Press OK when finished.

STEP 3: Enable SMB Services

Ironically, the SMB daemon and other core services are usually NOT started by default. You will need to change this so that your SMB daemon is now started.

Using the GUI from the main menu, go to System Settings, then Server Settings, then choose Services. You can also get to this using the command: system-config-services

While you're looking over this long list of services, please DISABLE things you know for sure you do not need to run on this SAMBA server. For instance apmd, isdn, etc. But also ensure that key services such as SMB are selected and RUNNING. Select SMB and press the Start button. If it is supposedly already running you can press the Restart button to be sure it is indeed running correctly now.

Now press the Save button to make sure the configuration changes have been saved for future restarts.

Sometimes using the GUI just does not properly restart the SMB daemon. In such odd cases, I want to suggest you force a manual restart from the command line with this command: /etc/rc.d/init.d/smb restart

If you keep having startup failures, where for every reboot you need to perform Step 3, you may need to manually configure your start up processes so that SMB will always be in the init.d bootup. Okay, if that sounded ridiculously confusing then how about just going to my article on manually starting services and configurations.

STEP 4: Create Server Users & Directories

You will need to ensure that people also have a login to the SAMBA server to do their work. Logins should be provided on an as needed basis. Obviously, in most cases the users accessing the SAMBA server will be a subset of the total users on the Windows business network.

Create user logins with the Gnome User Manager tool in Fedora. You can find this from the main menu by choosing System Settings, then Users & Groups. The command for this is: system-config-users

Notice this is the first step in creating SAMBA users, which comes later.

Add as many users as you need and then move on to the next part, which is creating directories (aka. folders) for use.

This is such an obvious step most people usually forget to think about it before hand. However, it is very helpful to think ahead what directories you will allow access to on the SAMBA Server for business use. In my case the people needing SAMBA server access will be updating webpages. Therefore, I do not need to add any other folders for file sharing or group interaction. Be sure you add any folders in a reasonable and ordered fashion.

A big TIP for those who are not aware, the permissions settings for your folders are very important. If you have no idea how to change permissions, then you may want to review this article on Linux filemanagement and permissions.

STEP 5: Configure the SAMBA Server

It's time to configure your SAMBA server to allow others on the intranet to login and use the server from Windows or Linux PCs.

From the main Fedora menu, choose System Settings, then Server Settings, then Samba. You can also get to this tool by typing the command: system-config-samba

You are about to make changes to the SAMBA Configuration file called smb.conf. This file is found under /etc/samba. If you encounter issues you may want to first start by using my example smb.conf file and then make the changes below. I also want you to be aware that you can edit configuration files with the web interface tool called Samba Web Administration Tool(SWAT) and several others. Now lets move ahead using the Configuration tool using the preloaded Fedora tools. NOTICE that many people begin by tinkering with their .conf file... this is NOT a good idea. First ensure that the basic samba connectivity works and THEN you can tinker with the smb.conf! (see troubleshooting below)

A. Begin by Making Changes to the Server Settings

Under the Preference menu item choose Server Settings...

Be sure to include the Windows workgroup name. In the example above the workgroup has been changed to net. Your situation may be different. In many cases naming the workgroup simply workgroup is fine, so long as your Windows PCs connect to this same name.

Under this same window, click on the Security tab. It comes by default with the appropriate settings for a basic SAMBA Server. The Authentication mode should be User. You would need to change this only if you plan to allow logins based on the Microsoft ADS.

Press OK to finish making basic changes to the server.

B. Select SAMBA Users

Under the Preference menu item choose Samba Users

In this window you must Add at least one user who will have access to the SAMBA Server. Notice that only user accounts you created in step 4 should be added to this listing.

Press the Add User button, then from the pull down select a user. Fill out the additional information needed for this SAMBA user. Press OK when finished.

C. Adding A Shared Folder

Under the SAMBA Server Configuration window, you must create at least one SAMBA share directory.

Press the Add button and then the Browse button. Now choose a folder you wish to make available to SAMBA users. Be careful, some folders have permissions settings that do not allow sharing. Now be sure to select the Read/Write option to allow people full access. Don't press OK yet!

You should see your shared folder appear under the listing as shown in the example above.

D. Adding Users

In the same window, select the second tab labeled Access. From here choose the first option labeled Only allow access to specific users and select the users you wish to give access to this specific SAMBA shared folder. Press OK when finished.

You can repeat steps C and D for each new shared folder.

Once completed, please choose File from the menu then choose Quit.

Hopefully this saved all of your settings properly. If you encounter issues with the graphic SAMBA configuration tool, such as it failing to accept your changes, then please read the Troubleshooting Tip 4 located below.

STEP 6: Restart the SAMBA Services

Now you need to restart all SAMBA services. You can use the process found in Step 3, except press the Restart button or use the word restart instead of the word start.

I mentioned earlier that sometimes your changes do not get properly picked up. I've installed so many different Fedora SAMBA configurations that I can't recall every reason. This may be a very good time to simply reboot the LINUX/SAMBA Server. Rebooting will ensure everything gets properly started up and all of the configuration changes are included. More importantly, this is likely the last time you will ever restart your SAMBA server again. Some of my FEDORA servers haven't been restarted in years.

STEP 7: Access the SAMBA Server from Windows

You're now ready to fully utilize your new intranet SAMBA Server for work. On any Windows PC you can access the server by simply going to the main Start menu, choosing Run and typing in the hostname of your SAMBA server. For example: \\linuxserver

Please notice that in the Windows environment you use different slashes and you need to ensure this syntax.

If this does not work, perhaps if the server is not yet included in your DNS, try accessing the SAMBA Server through its IP address: \\10.2.2.3

Obviously you need to use an actual hostname or IP address and not my example.

If all works well you should instantly see a SERVER LOGIN window. Now login using a SAMBA created username.

You should then instantly see the shared folder as well as the individual user's personal folder that exist on the SAMBA Server.

Congratulations, you're done.

Wednesday, December 9, 2009

Thursday, December 3, 2009

Creating a LVM in Linux

To create a LVM, we follow a three step process.

Step One : We need to select the physical storage resources that are going to be used for LVM. Typically, these are standard partitions but can also be Linux software RAID volumes that we've created. In LVM terminology, these storage resources are called "physical volumes" (eg: /dev/hda1, /dev/hda2 ... etc).

Our first step in setting up LVM involves properly initializing these partitions so that they can be recognized by the LVM system. This involves setting the correct partition type (usually using the fdisk command, and entering the type of partition as 'Linux LVM' - 0x8e ) if we're adding a physical partition; and then running the pvcreate command.

# pvcreate /dev/hda1 /dev/hda2 /dev/hda3

# pvscan

The above step creates a physical volume from 3 partitions which I want to initialize for inclusion in a volume group.

Step Two : Creating a volume group. You can think of a volume group as a pool of storage that consists of one or more physical volumes. While LVM is running, we can add physical volumes to the volume group or even remove them.

First initialize the /etc/lvmtab and /etc/lvmtab.d files by running the following command:

# vgscan

Now you can create a volume group and assign one or more physical volumes to the volume group.

# vgcreate my_vol_grp /dev/hda1 /dev/hda2

Behind the scenes, the LVM system allocates storage in equal-sized "chunks", called extents. We can specify the particular extent size to use at volume group creation time. The size of an extent defaults to 4Mb, which is perfect for most uses.You can use the -s flag to change the size of the extent. The extent affects the minimum size of changes which can be made to a logical volume in the volume group, and the maximum size of logical and physical volumes in the volume group. A logical volume can contain at most 65534 extents, so the default extent size (4 MB) limits the volume to about 256 GB; a size of 1 TB would require extents of atleast 16 MB. So to accomodate a 1 TB size, the above command can be rewriten as :

# vgcreate -s 16M my_vol_grp /dev/hda1 /dev/hda2

You can check the result of your work at this stage by entering the command:

# vgdisplay

This command displays the total physical extends in a volume group, size of each extent, the allocated size and so on.

Step Three : This step involves the creation of one or more "logical volumes" using our volume group storage pool. The logical volumes are created from volume groups, and may have arbitary names. The size of the new volume may be requested in either extents (-l switch) or in KB, MB, GB or TB ( -L switch) rounding up to whole extents.

# lvcreate -l 50 -n my_logical_vol my_vol_grp

The above command allocates 50 extents of space in my_vol_grp to the newly created my_logical_vol. The -n switch specifies the name of the logical volume we are creating.

Now you can check if you got the desired results by using the command :

# lvdisplay

which shows the information of your newly created logical volume.

Once a logical volume is created, we can go ahead and put a filesystem on it, mount it, and start using the volume to store our files. For creating a filesystem, we do the following:

# mke2fs -j /dev/my_vol_grp/my_logical_vol

The -j signifies journaling support for the ext3 filesystem we are creating.

Mount the newly created file system :

# mount /dev/my_vol_grp/my_logical_vol /data

Also do not forget to append the corresponding line in the /etc/fstab file:

#File: /etc/fstab

/dev/my_vol_grp/my_logical_vol /data ext3 defaults 0 0

Resizing Logical Volumes

This is a continuation of my earlier post Creating Logical Volumes in Linux . Here I will explain how to resize an existing logical volume. Logical volumes may be resized dynamically while preserving the data on the volume. Here is how:

Reducing a logical volume

Reduce the filesystem residing on the logical volume.

Reduce the logical volume.

For different file systems, it is achieved differently.

For ext2 file system

If you are using LVM 1, then both the above steps could be acomplished by executing a single utility called e2fsadm.

# umount /data# e2fsadm -L -1G /dev/my_vol_grp/my_logical_vol# mount /dataThe above command first reduces the filesystem in the 'my_logical_vol' by 1 GB and then reduces the my_logical_vol itself by the same amount.

If you are using LVM 2 - more recent linux distributions like Fedora use LVM 2 - then you do not have the 'e2fsadm' utility. So you have to first reduce the filesystem using 'resize2fs' and then reduce the logical volume using 'lvreduce'.

# umount /data# resize2fs /dev/my_vol_grp/my_logical_vol 1G# lvreduce -L 1G /dev/my_vol_grp/my_logical_vol# mount /dataIn the above case, I have reduced my file system "to" 1 GB size ...

Note: I didn't use the minus (-) sign while using resize2fs

... And then used the lvreduce command to reduce the logical volume "to" 1 GB. If I want to reduce the logical volume "by" 1 GB, then I give the same command but with "-L -1G" instead of "-L 1G".

Reiserfs file system

If you have a reiserfs filesystem, then the commands are a bit different than ext2(3).

# umount /data# resize_reiserfs -s -1G /dev/my_vol_grp/my_logical_vol# lvreduce -L -1G /dev/my_vol_grp/my_logical_vol# mount -t reiserfs /dataXFS and JFS filesystems

As of now, there is no way to shrink these filesystems residing on logical volumes.

Grow a Logical Volume

The steps for growing a logical volume are the exact opposite of those for shrinking the logical volume.

1.Enlarge the logical volume first.

2.Then resize the filesystem to the new size of your logical volume.

Update (July 22nd 2005) : I came across this very interesting article on LVM at RedHat Magazine which I found really informative.

Posted by Ravi

Step One : We need to select the physical storage resources that are going to be used for LVM. Typically, these are standard partitions but can also be Linux software RAID volumes that we've created. In LVM terminology, these storage resources are called "physical volumes" (eg: /dev/hda1, /dev/hda2 ... etc).

Our first step in setting up LVM involves properly initializing these partitions so that they can be recognized by the LVM system. This involves setting the correct partition type (usually using the fdisk command, and entering the type of partition as 'Linux LVM' - 0x8e ) if we're adding a physical partition; and then running the pvcreate command.

# pvcreate /dev/hda1 /dev/hda2 /dev/hda3

# pvscan

The above step creates a physical volume from 3 partitions which I want to initialize for inclusion in a volume group.

Step Two : Creating a volume group. You can think of a volume group as a pool of storage that consists of one or more physical volumes. While LVM is running, we can add physical volumes to the volume group or even remove them.

First initialize the /etc/lvmtab and /etc/lvmtab.d files by running the following command:

# vgscan

Now you can create a volume group and assign one or more physical volumes to the volume group.

# vgcreate my_vol_grp /dev/hda1 /dev/hda2

Behind the scenes, the LVM system allocates storage in equal-sized "chunks", called extents. We can specify the particular extent size to use at volume group creation time. The size of an extent defaults to 4Mb, which is perfect for most uses.You can use the -s flag to change the size of the extent. The extent affects the minimum size of changes which can be made to a logical volume in the volume group, and the maximum size of logical and physical volumes in the volume group. A logical volume can contain at most 65534 extents, so the default extent size (4 MB) limits the volume to about 256 GB; a size of 1 TB would require extents of atleast 16 MB. So to accomodate a 1 TB size, the above command can be rewriten as :

# vgcreate -s 16M my_vol_grp /dev/hda1 /dev/hda2

You can check the result of your work at this stage by entering the command:

# vgdisplay

This command displays the total physical extends in a volume group, size of each extent, the allocated size and so on.

Step Three : This step involves the creation of one or more "logical volumes" using our volume group storage pool. The logical volumes are created from volume groups, and may have arbitary names. The size of the new volume may be requested in either extents (-l switch) or in KB, MB, GB or TB ( -L switch) rounding up to whole extents.

# lvcreate -l 50 -n my_logical_vol my_vol_grp

The above command allocates 50 extents of space in my_vol_grp to the newly created my_logical_vol. The -n switch specifies the name of the logical volume we are creating.

Now you can check if you got the desired results by using the command :

# lvdisplay

which shows the information of your newly created logical volume.

Once a logical volume is created, we can go ahead and put a filesystem on it, mount it, and start using the volume to store our files. For creating a filesystem, we do the following:

# mke2fs -j /dev/my_vol_grp/my_logical_vol

The -j signifies journaling support for the ext3 filesystem we are creating.

Mount the newly created file system :

# mount /dev/my_vol_grp/my_logical_vol /data

Also do not forget to append the corresponding line in the /etc/fstab file:

#File: /etc/fstab

/dev/my_vol_grp/my_logical_vol /data ext3 defaults 0 0

Resizing Logical Volumes

This is a continuation of my earlier post Creating Logical Volumes in Linux . Here I will explain how to resize an existing logical volume. Logical volumes may be resized dynamically while preserving the data on the volume. Here is how:

Reducing a logical volume

Reduce the filesystem residing on the logical volume.

Reduce the logical volume.

For different file systems, it is achieved differently.

For ext2 file system

If you are using LVM 1, then both the above steps could be acomplished by executing a single utility called e2fsadm.

# umount /data# e2fsadm -L -1G /dev/my_vol_grp/my_logical_vol# mount /dataThe above command first reduces the filesystem in the 'my_logical_vol' by 1 GB and then reduces the my_logical_vol itself by the same amount.

If you are using LVM 2 - more recent linux distributions like Fedora use LVM 2 - then you do not have the 'e2fsadm' utility. So you have to first reduce the filesystem using 'resize2fs' and then reduce the logical volume using 'lvreduce'.

# umount /data# resize2fs /dev/my_vol_grp/my_logical_vol 1G# lvreduce -L 1G /dev/my_vol_grp/my_logical_vol# mount /dataIn the above case, I have reduced my file system "to" 1 GB size ...

Note: I didn't use the minus (-) sign while using resize2fs

... And then used the lvreduce command to reduce the logical volume "to" 1 GB. If I want to reduce the logical volume "by" 1 GB, then I give the same command but with "-L -1G" instead of "-L 1G".

Reiserfs file system

If you have a reiserfs filesystem, then the commands are a bit different than ext2(3).

# umount /data# resize_reiserfs -s -1G /dev/my_vol_grp/my_logical_vol# lvreduce -L -1G /dev/my_vol_grp/my_logical_vol# mount -t reiserfs /dataXFS and JFS filesystems

As of now, there is no way to shrink these filesystems residing on logical volumes.

Grow a Logical Volume

The steps for growing a logical volume are the exact opposite of those for shrinking the logical volume.

1.Enlarge the logical volume first.

2.Then resize the filesystem to the new size of your logical volume.

Update (July 22nd 2005) : I came across this very interesting article on LVM at RedHat Magazine which I found really informative.

Posted by Ravi

Wednesday, October 21, 2009

Oracle Table Compression

Oracle Table Compression

Oracle employs a very nice compression algorithm on database block level which is quite efficient and can yield threefold compression ratio in most cases.

The table compression is highly recommended for Data Warehouse environments where the operations tend to be read heavy and batch. Table compression is not recommended for OLTP environments or on tables with high OLTP like operations because of the cost associated with DML operations on compressed tables. This is true with Oracle 10g. Oracle 11g has gone wild, and offers OLTP compression as well!

I have found this quite nice short explenation in Oracle Documentation about what Oracle Table compression is:

"...Oracle table compression feature compresses data by eliminating duplicate values in a database block. Compressed data stored in a database block (also known as disk page) is self-contained. That is, all the information needed to re-create the uncompressed data in a block is available within that block. Duplicate values in all the rows and columns in a block are stored once at the beginning of the block, in what is called a symbol table for that block. All occurrences of such values are replaced with a short reference to the symbol table..."

The benefits associated with Table Compression are:

Save space

Faster backups

Query Performance

1. Find out the candidate table to compress

Usually NUM_ROWS will reveal the table in need for compression. In Data Warehouses these are usually fact tables. The fact tables tend to have lots of redundant data in the columns such as keys, data and status flag data. The compression algorithm uses a symbolic table structure to record this redundancy numerically rather than physically and repeatedly a the table level. That is compression on the 'Relational Table' level rather than byte level.

My worked example:

As example we will use the X table, the largest table which I will partition and compress all its partitions except the current partition. The reason we don't compress the current partition is because in the current month partition there will be load of ETL activity (DML) and we do not want to introduce high CPU usage because of compression. Compression is no good when there is lots of DML.

The size of the table before compression is 6GB:

SQL> select segment_name, sum(bytes)/1024/1024 MB from user_Segments where segment_name like 'X'

group by segment_name

SQL> /

SEGMENT_NAME MB

------------------------------ ----------

X 6179

2. List the partitions of the candidate table and decide which to compress

You can list the partition names for the tables you are interested to compress like this:

SQL> set pages 49999

SQL> l

1* select table_name, partition_name, compression from user_tab_partitions where table_name ='X'

SQL> /TABLE_NAME PARTITION_NAME COMPRESS

------------------------------ ------------------------------ --------

...

X P0201 DISABLED

X P0202 DISABLED

...

Then you can write an SQL query to build the command which will move and compress the partitions. Just by altering the property of the table to COMPRESS => ENABLED doesn't compress existing rows in the table. It will compress only the new rows which will be added to the table. To save space we must compress what is there and leave only the current partition and future partitions uncompressed. You must remember to NOT compress the current partition and the future partitions, as the script below will generate commands to compress all partitions. To find out the current partition look at the PARTITION_POSITION in USER_TAB_PARTITIONS, the highest positioned partition is the current partition. You can also order by PARTITION_POSITION as below and don't do the first line.

Generate PARTITION COMPRESS commands with the following script.

SQL> select 'ALTER TABLE 'table_name' MOVE PARTITION 'partition_name ' TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL; ' from user_tab_partitions where table_name ='X'

order by partition_position desc;

'ALTERTABLE'TABLE_NAME'MOVEPARTITION'PARTITION_NAME'TABLESPACENEW_TABLESPACECOMPRESSNOLOGGINGPARALLEL;'

---------------------------------------------------------------------------------------------------

ALTER TABLE X MOVE PARTITION P0207 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

ALTER TABLE X MOVE PARTITION P0107 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

WARNING : It is very important to choose to compress the tables in NEW tablespace as compressing them alone doesn't reorganize the extents in the datafiles in a way for them to be able to be re-sized and shrunk later on.

3. If there are bitmap indexes on the candidate table you must first mark them unusable

WARNING : To be able to apply the commands below the table in question must not have bitmap indexes , if it does you must mark them as unusable otherwise you get ORA-14646 err.

ORA-14646: Specified alter table operation involving compression cannot be performed

in the presence of usable bitmap indexes

See here for more info :http://download-uk.oracle.com/docs/cd/B19306_01/server.102/b14223/parpart.htm#i1006787

You will have to rebuild the indexes after you have finished with compressing the partitions.

4. Run the compression commands

For each partition you wish to compress you are now ready to run the commands like :

ALTER TABLE X MOVE PARTITION P0207 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

ALTER TABLE X MOVE PARTITION P0107 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

You can choose to use NOLOGGING for this operation as it generates lots of redo and will affect your archive logging and PARALLEL to make it faster.

After compressing all partitions on the X table the savings realised are as follows:

A 41% saving!

-- Before Compression

SEGMENT_NAME MB

------------------------------ ----------

X 6179

-- After Compression

SEGMENT_NAME MB

------------------------------ ----------

X 3670

5. You must rebuild the BITMAP INDEXES marked UNUSABLE at the previous step 4.

You will have to rebuild all bitmap , and other if any, indexes marked unusable at the previous steps, to do it quickly you ca use NOLOGGING and PARALLEL. Similarly write an SQL statement querying the USER_IND_PARTITIONS table and generate statement like the following for all indexes.

ALTER INDEX my_bitmap_index REBUILD PARTITION P1001 NOLOGGING;

ALTER INDEX my_bitmap_index REBUILD PARTITION P1002 NOLOGGING;

...

This is a one-off operation you won't do it again for the table, ie when you add a new partition.

6. You must rebuild ALL LOCAL INDEXES in the partitioned tables as their state has now become UNUSABLE

After the compression of the table partitions all local indexes associate with the table become UNUSABLE. As the oracle documentation categorically indicates:

If you use the ALTER TABLE ... MOVE statement, the local indexes for a partition become unusable. You have to rebuild them afterwards. Otherwise you will get errors in partition maintenance operations like this:

*

ERROR at line 1:

ORA-01502: index 'my_schema.my_X_table_index' or partition of such index is in unusable

state

You can do that by writing something like this and change the table_name=X for each table.

SQL> select 'alter table X modify partition ' partition_name ' rebuild unusable local indexes;' from user_tab_partitions where table_name='X'

NOTE: You will also have to rebuild indexes whenever you COMPRESS/NOCOMPRESS any partitions. That is, when you use any of these two operations, data moves, and indexes break and have to be rebuild.

7. Check to see the space you have gained.

After moving the table partition segments to their new tablespace and rebuilding necessary indexes you ought to gain some space. You will realize that the space which the objects before compression has decreased. You will require less space to store them, when compressed, in their new tablespace. After you move everything from the old tablespace into the new and you are sure the old tablespace is empty you can then drop the old tablespace and its datafiles.

Well the only approach to 'claim' space back after a table compression operation seems to be the use of ALTER TABLE ... MOVE TABLESPACE 'YOURNEWTABLESPACE' on a 'new' tablespace. Although we can claim physical space back from the database only by resizing datafiles and we could have 'moved' the tables withing their 'own' tablespace, it is not recommended.

Moving the tables within the same tablespace is not a good idea, because you are never sure if the reorganization of the table segments will actually move the HWM of the datafile down so that you can resize the datafile. (see below asktom.oracle.com links for more info)

There might be 'that' extent still hanging at the end of the tablespace which will prevent you from resizing the datafiles. Although you can fish that 'hanging at the end' extent out and reorganize the segment you are better off by moving all to a new tablespace.

Use DBA_SEGMENTS before you decide to drop a tablespace to see if any segments are left in it. Alternativaly you can choose to resize the datafiles to very small files.

I am also reading lately that in Oracle 11g you can get Advanced Table Compression in OLTP environments as well! It is an option which you have to puchase separately in Enterprise Edition though.

References:

Forum Questions to Asktom :

http://asktom.oracle.com/pls/asktom/f?p=100:11:0::::P11_QUESTION_ID:7873209745771#240368600346159666

http://asktom.oracle.com/pls/asktom/f?p=100:11:0::::P11_QUESTION_ID:54178027703899

Oracle Docs :

http://download-uk.oracle.com/docs/cd/B19306_01/server.102/b14223/parpart.htm#sthref196

Oracle employs a very nice compression algorithm on database block level which is quite efficient and can yield threefold compression ratio in most cases.

The table compression is highly recommended for Data Warehouse environments where the operations tend to be read heavy and batch. Table compression is not recommended for OLTP environments or on tables with high OLTP like operations because of the cost associated with DML operations on compressed tables. This is true with Oracle 10g. Oracle 11g has gone wild, and offers OLTP compression as well!

I have found this quite nice short explenation in Oracle Documentation about what Oracle Table compression is:

"...Oracle table compression feature compresses data by eliminating duplicate values in a database block. Compressed data stored in a database block (also known as disk page) is self-contained. That is, all the information needed to re-create the uncompressed data in a block is available within that block. Duplicate values in all the rows and columns in a block are stored once at the beginning of the block, in what is called a symbol table for that block. All occurrences of such values are replaced with a short reference to the symbol table..."

The benefits associated with Table Compression are:

Save space

Faster backups

Query Performance

1. Find out the candidate table to compress

Usually NUM_ROWS will reveal the table in need for compression. In Data Warehouses these are usually fact tables. The fact tables tend to have lots of redundant data in the columns such as keys, data and status flag data. The compression algorithm uses a symbolic table structure to record this redundancy numerically rather than physically and repeatedly a the table level. That is compression on the 'Relational Table' level rather than byte level.

My worked example:

As example we will use the X table, the largest table which I will partition and compress all its partitions except the current partition. The reason we don't compress the current partition is because in the current month partition there will be load of ETL activity (DML) and we do not want to introduce high CPU usage because of compression. Compression is no good when there is lots of DML.

The size of the table before compression is 6GB:

SQL> select segment_name, sum(bytes)/1024/1024 MB from user_Segments where segment_name like 'X'

group by segment_name

SQL> /

SEGMENT_NAME MB

------------------------------ ----------

X 6179

2. List the partitions of the candidate table and decide which to compress

You can list the partition names for the tables you are interested to compress like this:

SQL> set pages 49999

SQL> l

1* select table_name, partition_name, compression from user_tab_partitions where table_name ='X'

SQL> /TABLE_NAME PARTITION_NAME COMPRESS

------------------------------ ------------------------------ --------

...

X P0201 DISABLED

X P0202 DISABLED

...

Then you can write an SQL query to build the command which will move and compress the partitions. Just by altering the property of the table to COMPRESS => ENABLED doesn't compress existing rows in the table. It will compress only the new rows which will be added to the table. To save space we must compress what is there and leave only the current partition and future partitions uncompressed. You must remember to NOT compress the current partition and the future partitions, as the script below will generate commands to compress all partitions. To find out the current partition look at the PARTITION_POSITION in USER_TAB_PARTITIONS, the highest positioned partition is the current partition. You can also order by PARTITION_POSITION as below and don't do the first line.

Generate PARTITION COMPRESS commands with the following script.

SQL> select 'ALTER TABLE 'table_name' MOVE PARTITION 'partition_name ' TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL; ' from user_tab_partitions where table_name ='X'

order by partition_position desc;

'ALTERTABLE'TABLE_NAME'MOVEPARTITION'PARTITION_NAME'TABLESPACENEW_TABLESPACECOMPRESSNOLOGGINGPARALLEL;'

---------------------------------------------------------------------------------------------------

ALTER TABLE X MOVE PARTITION P0207 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

ALTER TABLE X MOVE PARTITION P0107 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

WARNING : It is very important to choose to compress the tables in NEW tablespace as compressing them alone doesn't reorganize the extents in the datafiles in a way for them to be able to be re-sized and shrunk later on.

3. If there are bitmap indexes on the candidate table you must first mark them unusable

WARNING : To be able to apply the commands below the table in question must not have bitmap indexes , if it does you must mark them as unusable otherwise you get ORA-14646 err.

ORA-14646: Specified alter table operation involving compression cannot be performed

in the presence of usable bitmap indexes

See here for more info :http://download-uk.oracle.com/docs/cd/B19306_01/server.102/b14223/parpart.htm#i1006787

You will have to rebuild the indexes after you have finished with compressing the partitions.

4. Run the compression commands

For each partition you wish to compress you are now ready to run the commands like :

ALTER TABLE X MOVE PARTITION P0207 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

ALTER TABLE X MOVE PARTITION P0107 TABLESPACE NEW_TABLESPACE COMPRESS NOLOGGING PARALLEL;

You can choose to use NOLOGGING for this operation as it generates lots of redo and will affect your archive logging and PARALLEL to make it faster.

After compressing all partitions on the X table the savings realised are as follows:

A 41% saving!

-- Before Compression

SEGMENT_NAME MB

------------------------------ ----------

X 6179

-- After Compression

SEGMENT_NAME MB

------------------------------ ----------

X 3670

5. You must rebuild the BITMAP INDEXES marked UNUSABLE at the previous step 4.

You will have to rebuild all bitmap , and other if any, indexes marked unusable at the previous steps, to do it quickly you ca use NOLOGGING and PARALLEL. Similarly write an SQL statement querying the USER_IND_PARTITIONS table and generate statement like the following for all indexes.

ALTER INDEX my_bitmap_index REBUILD PARTITION P1001 NOLOGGING;

ALTER INDEX my_bitmap_index REBUILD PARTITION P1002 NOLOGGING;

...

This is a one-off operation you won't do it again for the table, ie when you add a new partition.

6. You must rebuild ALL LOCAL INDEXES in the partitioned tables as their state has now become UNUSABLE

After the compression of the table partitions all local indexes associate with the table become UNUSABLE. As the oracle documentation categorically indicates:

If you use the ALTER TABLE ... MOVE statement, the local indexes for a partition become unusable. You have to rebuild them afterwards. Otherwise you will get errors in partition maintenance operations like this:

*

ERROR at line 1:

ORA-01502: index 'my_schema.my_X_table_index' or partition of such index is in unusable

state

You can do that by writing something like this and change the table_name=X for each table.

SQL> select 'alter table X modify partition ' partition_name ' rebuild unusable local indexes;' from user_tab_partitions where table_name='X'

NOTE: You will also have to rebuild indexes whenever you COMPRESS/NOCOMPRESS any partitions. That is, when you use any of these two operations, data moves, and indexes break and have to be rebuild.

7. Check to see the space you have gained.

After moving the table partition segments to their new tablespace and rebuilding necessary indexes you ought to gain some space. You will realize that the space which the objects before compression has decreased. You will require less space to store them, when compressed, in their new tablespace. After you move everything from the old tablespace into the new and you are sure the old tablespace is empty you can then drop the old tablespace and its datafiles.

Well the only approach to 'claim' space back after a table compression operation seems to be the use of ALTER TABLE ... MOVE TABLESPACE 'YOURNEWTABLESPACE' on a 'new' tablespace. Although we can claim physical space back from the database only by resizing datafiles and we could have 'moved' the tables withing their 'own' tablespace, it is not recommended.

Moving the tables within the same tablespace is not a good idea, because you are never sure if the reorganization of the table segments will actually move the HWM of the datafile down so that you can resize the datafile. (see below asktom.oracle.com links for more info)

There might be 'that' extent still hanging at the end of the tablespace which will prevent you from resizing the datafiles. Although you can fish that 'hanging at the end' extent out and reorganize the segment you are better off by moving all to a new tablespace.

Use DBA_SEGMENTS before you decide to drop a tablespace to see if any segments are left in it. Alternativaly you can choose to resize the datafiles to very small files.

I am also reading lately that in Oracle 11g you can get Advanced Table Compression in OLTP environments as well! It is an option which you have to puchase separately in Enterprise Edition though.

References:

Forum Questions to Asktom :

http://asktom.oracle.com/pls/asktom/f?p=100:11:0::::P11_QUESTION_ID:7873209745771#240368600346159666

http://asktom.oracle.com/pls/asktom/f?p=100:11:0::::P11_QUESTION_ID:54178027703899

Oracle Docs :

http://download-uk.oracle.com/docs/cd/B19306_01/server.102/b14223/parpart.htm#sthref196

Wednesday, August 5, 2009

How to Enable Flashback Database

1)Configure the Database in archivelog mode.

A)See the current archiving mode of the database.

SQL>select log_mode from v$database;

B)Perform clean shutdown of the database.

shutdown immediate or,

shutdown transactional or,

shutdown normal

You cannot change the mode from ARCHIVELOG to NOARCHIVELOG if any datafiles need media recovery.

C)Backup the Database.

D)If you use pfile as initialization file then edit the archive destination parameter (like LOG_ARCHIVE_DEST) as your archival destination. If you use spfile ignore this step.

E)Mount the database but don't open.

STARTUP MOUNT

F)Change the archival mode and open the database.

ALTER DATABASE ARCHIVELOG

If you use spfile then you can use ALTER SYSTEM SET LOG_ARCHIVE_DEST='your location'

ALTER DATABASE OPEN;

G)Check the archival Location

archive log list

H)Shutdown and Backup the database.

SHUTDOWN IMMEDIATE

2)Configure Flash Recovery Area.

A)Set up DB_RECOVERY_FILE_DEST_SIZE:

SQL> alter system set db_recovery_file_dest_size=2G;

B)Decide the area from OS where you will place Flash recovery area.

SQL>host mkdir /oradata1/flash_recovery_area

C)Set up DB_RECOVERY_FILE_DEST:

SQL> alter system set db_recovery_file_dest='/oradata1/flash_recovery_area';

3)Shutdown and mount the database.

SQL>SHUTDOWN IMMEDIAT;

SQL>STARTUP MOUNT:

SQL>Alter Database Flashback ON;

SQL> select flashback_on from v$database;

4)Open the database and optionally you can set DB_FLASHBACK_RETENTION_TARGET to

the length of the desired flashback window in minutes.

SQL> ALTER DATABASE OPEN;

SQL> ALTER SYSTEM SET DB_FLASHBACK_RETENTION_TARGET=4320;

SQL> show parameter DB_FLASHBACK_RETENTION_TARGET;

NOTE:

The V$RECOVERY_FILE_DEST and V$FLASH_RECOVERY_AREA_USAGE views can help to find out the current location, disk quota, space in use, space reclaimable by deleting files,total number of files, the percentage of the total disk quota used by different types of files, and how much space for each type of file can be reclaimed by deleting files that are obsolete, redundant, or already backed up to tape.

In order to disable flash recovery area issue,

ALTER SYSTEM SET DB_RECOVERY_FILE_DEST='' SCOPE=BOTH SID="*";

However you can disable Flashback Database for a tablespace.Then you must take its datafiles offline before running FLASHBACK DATABASE.

Like,

SQL> select file_name,file_id from dba_data_files where tablespace_name='TEST';

FILE_NAME FILE_ID

------------------------------ ----------

/oradata2/1.dbf 5

SQL> alter database datafile 5 offline;

Database altered.

SQL> ALTER TABLESPACE test flashback off;

Tablespace altered.

SQL> recover datafile 5;

Media recovery complete.

SQL> alter database datafile 5 online;

Database altered.

To disable flashback feature simply issue,

SQL>ALTER DATABASE FLASHBACK OFF;

Database altered.

Mohammad Abdul Momin Arju

A)See the current archiving mode of the database.

SQL>select log_mode from v$database;

B)Perform clean shutdown of the database.

shutdown immediate or,

shutdown transactional or,

shutdown normal

You cannot change the mode from ARCHIVELOG to NOARCHIVELOG if any datafiles need media recovery.

C)Backup the Database.

D)If you use pfile as initialization file then edit the archive destination parameter (like LOG_ARCHIVE_DEST) as your archival destination. If you use spfile ignore this step.

E)Mount the database but don't open.

STARTUP MOUNT

F)Change the archival mode and open the database.

ALTER DATABASE ARCHIVELOG

If you use spfile then you can use ALTER SYSTEM SET LOG_ARCHIVE_DEST='your location'

ALTER DATABASE OPEN;

G)Check the archival Location

archive log list

H)Shutdown and Backup the database.

SHUTDOWN IMMEDIATE

2)Configure Flash Recovery Area.

A)Set up DB_RECOVERY_FILE_DEST_SIZE:

SQL> alter system set db_recovery_file_dest_size=2G;

B)Decide the area from OS where you will place Flash recovery area.

SQL>host mkdir /oradata1/flash_recovery_area

C)Set up DB_RECOVERY_FILE_DEST:

SQL> alter system set db_recovery_file_dest='/oradata1/flash_recovery_area';

3)Shutdown and mount the database.

SQL>SHUTDOWN IMMEDIAT;

SQL>STARTUP MOUNT:

SQL>Alter Database Flashback ON;

SQL> select flashback_on from v$database;

4)Open the database and optionally you can set DB_FLASHBACK_RETENTION_TARGET to

the length of the desired flashback window in minutes.

SQL> ALTER DATABASE OPEN;

SQL> ALTER SYSTEM SET DB_FLASHBACK_RETENTION_TARGET=4320;

SQL> show parameter DB_FLASHBACK_RETENTION_TARGET;

NOTE:

The V$RECOVERY_FILE_DEST and V$FLASH_RECOVERY_AREA_USAGE views can help to find out the current location, disk quota, space in use, space reclaimable by deleting files,total number of files, the percentage of the total disk quota used by different types of files, and how much space for each type of file can be reclaimed by deleting files that are obsolete, redundant, or already backed up to tape.

In order to disable flash recovery area issue,

ALTER SYSTEM SET DB_RECOVERY_FILE_DEST='' SCOPE=BOTH SID="*";

However you can disable Flashback Database for a tablespace.Then you must take its datafiles offline before running FLASHBACK DATABASE.

Like,

SQL> select file_name,file_id from dba_data_files where tablespace_name='TEST';

FILE_NAME FILE_ID

------------------------------ ----------

/oradata2/1.dbf 5

SQL> alter database datafile 5 offline;

Database altered.

SQL> ALTER TABLESPACE test flashback off;

Tablespace altered.

SQL> recover datafile 5;

Media recovery complete.

SQL> alter database datafile 5 online;

Database altered.

To disable flashback feature simply issue,

SQL>ALTER DATABASE FLASHBACK OFF;

Database altered.

Mohammad Abdul Momin Arju

Thursday, July 30, 2009

Setting NLS_LANG for Oracle

NLS_LANG= language_territory.character set

Example:

export NLS_LANG=AMERICAN_AMERICA.WE8ISO8859P1

Setting NLS_LANG tells Oracle what characterset the client is using so Oracle can do conversion if needed from client’s characterset to the database characterset and setting this parameter on the client does not change the client’s characterset. Setting Language and Territory in NLS_LANG has nothing to do with storing characters in database, it’s controlled by the characterset and of course if the database can store that characterset.

To check session NLS session parameters, note this doesn’t return the characterset set by NLS_LANG

SELECT * FROM NLS_SESSION_PARAMETERS;

SELECT USERENV ('language') FROM DUAL;

To find the NLS_LANG of your database one can run the following SQL:

SQL> SELECT * from NLS_DATABASE_PARAMETERS WHERE parameter IN ( 'NLS_LANGUAGE', 'NLS_TERRITORY', 'NLS_CHARACTERSET');

PARAMETER VALUE

—————————— —————————————-

NLS_LANGUAGE AMERICAN

NLS_TERRITORY AMERICA

NLS_CHARACTERSET WE8MSWIN1252

To get the possible value for language, territory and characterset you can check the view V$NLS_VALID_VALUES.

– parameter – possible values LANGUAGE, TERRITORY, CHARACTERSET

SELECT parameter, value FROM V$NLS_VALID_VALUES;

To change the client language

export NLS_LANG=BELGIUM_.WE8ISO8859P1

To change the client territory

export NLS_LANG=_BELGIUM.WE8ISO8859P1

To change the client characterset

export NLS_LANG=.AL32UTF8

Amin Jaffer

Example:

export NLS_LANG=AMERICAN_AMERICA.WE8ISO8859P1

Setting NLS_LANG tells Oracle what characterset the client is using so Oracle can do conversion if needed from client’s characterset to the database characterset and setting this parameter on the client does not change the client’s characterset. Setting Language and Territory in NLS_LANG has nothing to do with storing characters in database, it’s controlled by the characterset and of course if the database can store that characterset.

To check session NLS session parameters, note this doesn’t return the characterset set by NLS_LANG

SELECT * FROM NLS_SESSION_PARAMETERS;

SELECT USERENV ('language') FROM DUAL;

To find the NLS_LANG of your database one can run the following SQL:

SQL> SELECT * from NLS_DATABASE_PARAMETERS WHERE parameter IN ( 'NLS_LANGUAGE', 'NLS_TERRITORY', 'NLS_CHARACTERSET');

PARAMETER VALUE

—————————— —————————————-

NLS_LANGUAGE AMERICAN

NLS_TERRITORY AMERICA

NLS_CHARACTERSET WE8MSWIN1252

To get the possible value for language, territory and characterset you can check the view V$NLS_VALID_VALUES.

– parameter – possible values LANGUAGE, TERRITORY, CHARACTERSET

SELECT parameter, value FROM V$NLS_VALID_VALUES;

To change the client language

export NLS_LANG=BELGIUM_.WE8ISO8859P1

To change the client territory

export NLS_LANG=_BELGIUM.WE8ISO8859P1

To change the client characterset

export NLS_LANG=.AL32UTF8

Amin Jaffer

Wednesday, July 29, 2009

HOWTO: Formatting and partitioning your external usb hard drive in LINUX

Say the disk is at /dev/sdc (and not mounted)

sudo parted -i /dev/sdc

mklabel msdos

mkpart primary 0% 100% (makes 1 primary partition span entire disk)

mkfs 1 fat32 (puts a FAT32 filesystem on that partition)

quit

Then you should reformat the disk and check for bad blocks (unmount first if necessary)

sudo mkfs.vfat -c -L label -F 32 /dev/sdc1

note: label can be anything you want up to 11 characters, no spaces.

If you want to go nuts with the check, specify -c twice,

that will write/read 4 bit patterns to the entire drive, giving it a real workout.

or

mkfs.vfat /dev/sdc1

sudo parted -i /dev/sdc

mklabel msdos

mkpart primary 0% 100% (makes 1 primary partition span entire disk)

mkfs 1 fat32 (puts a FAT32 filesystem on that partition)

quit

Then you should reformat the disk and check for bad blocks (unmount first if necessary)

sudo mkfs.vfat -c -L label -F 32 /dev/sdc1

note: label can be anything you want up to 11 characters, no spaces.

If you want to go nuts with the check, specify -c twice,

that will write/read 4 bit patterns to the entire drive, giving it a real workout.

or

mkfs.vfat /dev/sdc1

Tuesday, July 7, 2009

Copying Data from One Database to Another

SET ARRAYSIZE 50

SET LONG 1000

SET COPYCOMMIT 100 /* To set commit size */

EXP1:

COPY FROM USER1/USER@SID1 TO USER2/USER2@SID2 INSERT TABLE USING SELECT * FROM TABLEA where ....;

EXP2:

COPY FROM USER1/USER@SID1 TO USER2/USER2@SID2

CREATE NEWTABLE (DEPARTMENT_ID, DEPARTMENT_NAME, CITY)

USING SELECT * FROM EMP_DETAILS_VIEW Where.....;

SET LONG 1000

SET COPYCOMMIT 100 /* To set commit size */

EXP1:

COPY FROM USER1/USER@SID1 TO USER2/USER2@SID2 INSERT TABLE USING SELECT * FROM TABLEA where ....;

EXP2:

COPY FROM USER1/USER@SID1 TO USER2/USER2@SID2

CREATE NEWTABLE (DEPARTMENT_ID, DEPARTMENT_NAME, CITY)

USING SELECT * FROM EMP_DETAILS_VIEW Where.....;

Monday, July 6, 2009

To Start,Stop,Restart crontab service in Linux

/sbin/service crond start

/sbin/service crond stop

/sbin/service crond restart

/sbin/service crond stop

/sbin/service crond restart

Sunday, June 14, 2009

Restore table drop by mistake

If there is table drop by mistake

1. Performing the incomplete recovery manually

Bring all offline datafiles online

SQL> select name,status from v$datafile ;

SQL>shutdown immediate ;

Perform complete close backup ,by copying the database file to another location this

backup must be taken before performing incomplete recovery .

SQL>startup mount;

SQL>alter session set NLS_DATE_FORMAT=’DD-MON-YYYY HH24:MI:SS’ ; this is optional

SQL>recover database until time ’07-DES-2008 10:00:00’ ;

SQL>alter database open resetlogs ;

Now you can see the deleted table,,,,,,

2. Incomplete recovery using RMAN

The set until command can take one of the following forms

• SET UNTIL TIME ‘DD MON YYYY HH:MI:SS’

• SET UNTIL LOG SEQUENCE n

• SET UNTIL SCN n

Using The LogMiner utility to determine the SCN(system change number)

The logminer utility used to analyze redo log files ,and the following steps describe how you can read the contents of redo log file

SQL>alter system set UTL_FILE_DIR=’c:\LOGMINIER’ SCOPE=SPFILE ;

SQL>SHUTDOWN IMMEDIATE ;

SQL>STARTUP ;

Create directory called LOGMINIER in c:\ drive

SQL>EXECUTE DBMS_LOGMINR_D.BUILD(‘LOGDICT.ORA’,’C:\LOGMINER’) ;

To see the log files

SQL>select member from v$logfile

MEMBER

D:\ORACLE\ORADATA\BOSST\REDO01.LOG

D:\ORACLE\ORADATA\BOSST\REDO02.LOG

D:\ORACLE\ORADATA\BOSST\REDO03.LOG

D:\ORACLE\ORADATA\BOSST\REDO04.LOG

SQL>EXECUTE DBMS_LOGMINR.ADD_LOGFILE(LOGFILENAME=>’D:\ORACLE\ORADATA\BOSST\REDO01.LOG’ ,OPTIONS => DBMS_LOGMNR.NEW) ;

SQL>EXECUTE DBMS_LOGMINR.ADD_LOGFILE(LOGFILENAME=>'D:\ORACLE\ORADATA\BOSST\REDO02.LOG’ ,OPTIONS => DBMS_LOGMNR.ADDFILE) ; etc…

Next start the mining process using DBMS_LOGMNR.START_LOGMNR procedure .The DDL_DICT_TRACKING option that mean you will track DDL command In the redo log file

SQL> DBMS_LOGMNR.START_LOGMNR(OPTIONS=>DBMS_LOGMNR .DDL_DICT_TRACKING) ;

SQL>select SCN ,SQL_REDO FROM V$LOGMNR_CONTENTS WHERE SEG_NAME =’TABLE NAME’;

Now you can get the SCN , and after that you can stop the mining session by

executing this command

SQL>EXECUTE DBMS_LOGMNR.END_LOGMNR ;

SQL> select file# ,status from v$datafile ;

If there is any file offline you must bring them online by this command

SQL>ALTER DATABASE DATAFILE ONLINE;

Shutdown database and perform complete backup

Connect to RMAN and to the target database

RMAN>STARTUP MOUNT

RMAN>RUN{

ALLOCATE CHANNEL C1 TYPE DISK ;

SET UNTIL SCN 8767887 ;

RESTORE DATABASE;

RECOVER DATABASE ;

ALTER DATABASE OPEN RESETLOGS;

}

Now you can see the dropped table

1. Performing the incomplete recovery manually

Bring all offline datafiles online

SQL> select name,status from v$datafile ;

SQL>shutdown immediate ;

Perform complete close backup ,by copying the database file to another location this

backup must be taken before performing incomplete recovery .

SQL>startup mount;

SQL>alter session set NLS_DATE_FORMAT=’DD-MON-YYYY HH24:MI:SS’ ; this is optional

SQL>recover database until time ’07-DES-2008 10:00:00’ ;

SQL>alter database open resetlogs ;

Now you can see the deleted table,,,,,,

2. Incomplete recovery using RMAN

The set until command can take one of the following forms

• SET UNTIL TIME ‘DD MON YYYY HH:MI:SS’

• SET UNTIL LOG SEQUENCE n

• SET UNTIL SCN n

Using The LogMiner utility to determine the SCN(system change number)

The logminer utility used to analyze redo log files ,and the following steps describe how you can read the contents of redo log file

SQL>alter system set UTL_FILE_DIR=’c:\LOGMINIER’ SCOPE=SPFILE ;

SQL>SHUTDOWN IMMEDIATE ;

SQL>STARTUP ;

Create directory called LOGMINIER in c:\ drive

SQL>EXECUTE DBMS_LOGMINR_D.BUILD(‘LOGDICT.ORA’,’C:\LOGMINER’) ;

To see the log files

SQL>select member from v$logfile

MEMBER

D:\ORACLE\ORADATA\BOSST\REDO01.LOG

D:\ORACLE\ORADATA\BOSST\REDO02.LOG

D:\ORACLE\ORADATA\BOSST\REDO03.LOG

D:\ORACLE\ORADATA\BOSST\REDO04.LOG

SQL>EXECUTE DBMS_LOGMINR.ADD_LOGFILE(LOGFILENAME=>’D:\ORACLE\ORADATA\BOSST\REDO01.LOG’ ,OPTIONS => DBMS_LOGMNR.NEW) ;

SQL>EXECUTE DBMS_LOGMINR.ADD_LOGFILE(LOGFILENAME=>'D:\ORACLE\ORADATA\BOSST\REDO02.LOG’ ,OPTIONS => DBMS_LOGMNR.ADDFILE) ; etc…

Next start the mining process using DBMS_LOGMNR.START_LOGMNR procedure .The DDL_DICT_TRACKING option that mean you will track DDL command In the redo log file

SQL> DBMS_LOGMNR.START_LOGMNR(OPTIONS=>DBMS_LOGMNR .DDL_DICT_TRACKING) ;

SQL>select SCN ,SQL_REDO FROM V$LOGMNR_CONTENTS WHERE SEG_NAME =’TABLE NAME’;

Now you can get the SCN , and after that you can stop the mining session by

executing this command

SQL>EXECUTE DBMS_LOGMNR.END_LOGMNR ;

SQL> select file# ,status from v$datafile ;

If there is any file offline you must bring them online by this command

SQL>ALTER DATABASE DATAFILE

Shutdown database and perform complete backup

Connect to RMAN and to the target database

RMAN>STARTUP MOUNT

RMAN>RUN{

ALLOCATE CHANNEL C1 TYPE DISK ;

SET UNTIL SCN 8767887 ;

RESTORE DATABASE;

RECOVER DATABASE ;

ALTER DATABASE OPEN RESETLOGS;

}

Now you can see the dropped table

Tuesday, June 9, 2009

Changing Oracle Database Character Set to Arabic

SHUTDOWN IMMEDIATE;

STARTUP MOUNT;

ALTER SYSTEM ENABLE RESTRICTED SESSION;

ALTER SYSTEM SET JOB_QUEUE_PROCESSES=0;

ALTER SYSTEM SET AQ_TM_PROCESSES=0;

ALTER DATABASE OPEN;

ALTER DATABASE CHARACTER SET INTERNAL_USE AR8MSWIN1256;

SHUTDOWN IMMEDIATE;

STARTUP;

STARTUP MOUNT;

ALTER SYSTEM ENABLE RESTRICTED SESSION;

ALTER SYSTEM SET JOB_QUEUE_PROCESSES=0;

ALTER SYSTEM SET AQ_TM_PROCESSES=0;

ALTER DATABASE OPEN;

ALTER DATABASE CHARACTER SET INTERNAL_USE AR8MSWIN1256;

SHUTDOWN IMMEDIATE;

STARTUP;

Monday, June 8, 2009

Sunday, May 31, 2009

Change Serial Number for Windows XP

If you need to change Serial Number (Product Key) for Windows XP for various reasons (maybe you find that your key is pirated and want to enter a new ok one) follow next steps:

1.Click on Start and then Run.

2.In the text box in the Run window, type regedit and click OK. This will open the Registry Editor program.

3.Locate the HKEY_LOCAL_MACHINE folder under My Computer and click on the (+) sign next the folder name to expand the folder.

4.Continue to expand folders until you reach the HKEY_LOCAL_MACHINE\Software\Microsoft\WindowsNT\Current Version\WPAEvents registry key.

5.Click on the WPAEvents folder.

6.In the results that appear in the window on the right, locate OOBETimer.

7.Right-click on the OOBETimer entry and choose Modify from the resulting menu.

8.Change at least one digit in the Value data text box and click OK. This will deactivate Windows XP.

9.Click on Start and then Run.

10.In the text box in the Run window, type the following command and click OK.

%systemroot%\system32\oobe\msoobe.exe /a

11.When the Windows Product Activation window appears, choose Yes, I want to telephone a customer service representative to activate Windows and then click Next.

12.Click Change Product Key.

13.Type your new, valid Windows XP product key in the New key text boxes and then click Update.

14.If you’re taken back to the previous screen and prompted, choose Remind me later.

15.Restart your PC.

16.Repeat steps 9 and 10 to verify that Windows is activated. You receive the following message:Windows is already activated. Click OK to exit.

17.Click OK.

1.Click on Start and then Run.

2.In the text box in the Run window, type regedit and click OK. This will open the Registry Editor program.

3.Locate the HKEY_LOCAL_MACHINE folder under My Computer and click on the (+) sign next the folder name to expand the folder.

4.Continue to expand folders until you reach the HKEY_LOCAL_MACHINE\Software\Microsoft\WindowsNT\Current Version\WPAEvents registry key.

5.Click on the WPAEvents folder.

6.In the results that appear in the window on the right, locate OOBETimer.

7.Right-click on the OOBETimer entry and choose Modify from the resulting menu.

8.Change at least one digit in the Value data text box and click OK. This will deactivate Windows XP.

9.Click on Start and then Run.

10.In the text box in the Run window, type the following command and click OK.

%systemroot%\system32\oobe\msoobe.exe /a

11.When the Windows Product Activation window appears, choose Yes, I want to telephone a customer service representative to activate Windows and then click Next.

12.Click Change Product Key.

13.Type your new, valid Windows XP product key in the New key text boxes and then click Update.

14.If you’re taken back to the previous screen and prompted, choose Remind me later.

15.Restart your PC.

16.Repeat steps 9 and 10 to verify that Windows is activated. You receive the following message:Windows is already activated. Click OK to exit.

17.Click OK.

Monday, May 25, 2009

Tuesday, May 19, 2009

Delete Archive Log Files From RMAN

to list all avilable archive log file

from this command we will able to know the sequence of the archive log file

from RMAN>list archivelog all;

from RMAN>DELETE ARCHIVELOG SEQUENCE between SEQ... AND SEQ...n ;

from this command we will able to know the sequence of the archive log file

from RMAN>list archivelog all;

from RMAN>DELETE ARCHIVELOG SEQUENCE between SEQ... AND SEQ...n ;

Wednesday, May 13, 2009

Oracle Statspack Survival Guide

Oracle Statspack Survival Guide

________________________________________

Overview

STATSPACK is a performance diagnosis tool, available since Oracle8i. STATSPACK can be considered BSTAT/ESTAT's successor, incorporating many new features. STATSPACK is a diagnosis tool for instance-wide performance problems; it also supports application tuning activities by providing data which identifies high-load SQL statements. STATSPACK can be used both proactively to monitor the changing load on a system, and also reactively to investigate a performance problem.

Remember to set timed_statistics to true for your instance. Setting this parameter provides timing data which is invaluable for performance tuning.

The «more is better» approach is not always better!

The single most common misuse of STATSPACK is the «more is better» approach. Often STATSPACK reports spans hours or even days. The times between the snapshots (the collection points) should, in general, be measured in minutes, not hours and never days.

The STATSPACK reports we like are from 1 5-minute intervals during a busy or peak time, when the performance is at its worst. That provides a very focused look at what was going wrong at that exact moment in time. The problem with a very large STATSPACK snapshot window, where the time between the two snapshots is measured in hours, is that the events that caused serious performance issues for 20 minutes during peak processing don't look so bad when they're spread out over an 8-hour window. It's also true with STATSPACK that measuring things over too long of a period tends to level them out over time. Nothing will stand out and strike you as being wrong. So, when taking snapshots, schedule them about 15 to 30 minutes (maximum) apart. You might wait 3 or 4 hours between these two observations, but you should always do them in pairs and within minutes of each other.

«Having a history of the good times is just as important as having a history of the bad; you need both»

Another common mistake with STATSPACK is to gather snapshots only when there is a problem. That is fine to a point, but how much better would it be to have a STATSPACK report from when things were going good to compare it with when things are bad. A simple STATSPACK report that shows a tremendous increase in physical 1/0 activity or table scans (long tables) could help you track down that missing index. Or, if you see your soft parse percentage value went from 99% to 70%, you know that someone introduced a new feature into the system that isn't using bind variables (and is killing you). Having a history of the good times is just as important as having a history of the bad; you need both.

Architecture

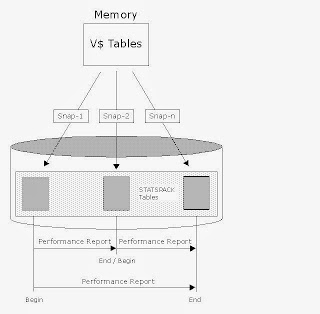

To fully understand the STATSPACK architecture, we have to look at the basic nature of the STATSPACK utility. The STATSPACK utility is an outgrowth of the Oracle UTLBSTAT and UTLESTAT utilities, which have been used with Oracle since the very earliest versions.

UTLBSTAT - UTLESTAT

The BSTAT-ESTAT utilities capture information directly from the Oracle's in-memory structures and then compare the information from two snapshots in order to produce an elapsed-time report showing the activity of the database. If we look inside utlbstat.sql and utlestat.sql, we see the SQL that samples directly from the view: V$SYSSTAT;

insert into stats$begin_stats select * from v$sysstat;

insert into stats$end_stats select * from v$sysstat;

STATSPACK

When a snapshot is executed, the STATSPACK software will sample from the RAM in-memory structures inside the SGA and transfer the values into the corresponding STATSPACK tables. These values are then available for comparing with other snapshots.

Note that in most cases, there is a direct correspondence between the v$ view in the SGA and the corresponding STATSPACK table. For example, we see that the stats$sysstat table is similar to the v$sysstat view.

SQL> desc v$sysstat;

Name Null? Type

----------------------------------------- -------- -----------------------

STATISTIC# NUMBER

NAME VARCHAR2(64)

CLASS NUMBER

VALUE NUMBER

STAT_ID NUMBER

SQL> desc stats$sysstat;

Name Null? Type

----------------------------------------- -------- -----------------------

SNAP_ID NOT NULL NUMBER

DBID NOT NULL NUMBER

INSTANCE_NUMBER NOT NULL NUMBER

STATISTIC# NOT NULL NUMBER

NAME NOT NULL VARCHAR2(64)

VALUE NUMBER

It is critical to your understanding of the STATSPACK utility that you realize the information captured by a STATSPACK snapshot is accumulated values. The information from the V$VIEWS collects database information at startup time and continues to add the values until the instance is shutdown. In order to get a meaningful elapsed-time report, you must run a STATSPACK report that compares two snapshots as shown above. It is critical to understand that a report will be invalid if the database is shut down between snapshots. This is because all of the accumulated values will be reset, causing the second snapshot to have smaller values than the first snapshot.

Installing and Configuring STATSPACK

Create PERFSTAT Tablespace

The STATSPACK utility requires an isolated tablespace to obtain all of the objects and data. For uniformity, it is suggested that the tablespace be called PERFSTAT, the same name as the schema owner for the STATSPACK tables. It is important to closely watch the STATSPACK data to ensure that the stats$sql_summary table is not taking an inordinate amount of space.

SQL> CREATE TABLESPACE perfstat

DATAFILE '/u01/oracle/db/AKI1_perfstat.dbf' SIZE 1000M REUSE

EXTENT MANAGEMENT LOCAL UNIFORM SIZE 512K

SEGMENT SPACE MANAGEMENT AUTO

PERMANENT

ONLINE;

Run the Create Scripts

Now that the tablespace exists, we can begin the installation process of the STATSPACK software. Note that you must have performed the following before attempting to install STATSPACK.

Run catdbsyn.sql as SYS

Run dbmspool.sql as SYS

$ cd $ORACLE_HOME/rdbms/admin

$ sqlplus "/ as sysdba"

SQL> start spcreate.sql

Choose the PERFSTAT user's password

-----------------------------------

Not specifying a password will result in the installation FAILING

Enter value for perfstat_password: perfstat

Choose the Default tablespace for the PERFSTAT user

---------------------------------------------------

Below is the list of online tablespaces in this database which can

store user data. Specifying the SYSTEM tablespace for the user's

default tablespace will result in the installation FAILING, as

using SYSTEM for performance data is not supported.

Choose the PERFSTAT users's default tablespace. This is the tablespace

in which the STATSPACK tables and indexes will be created.

TABLESPACE_NAME CONTENTS STATSPACK DEFAULT TABLESPACE

------------------------------ --------- ----------------------------

PERFSTAT PERMANENT

SYSAUX PERMANENT *

USERS PERMANENT

Pressing

tablespace (identified by *) being used.

Enter value for default_tablespace: PERFSTAT

Choose the Temporary tablespace for the PERFSTAT user

-----------------------------------------------------

Below is the list of online tablespaces in this database which can

store temporary data (e.g. for sort workareas). Specifying the SYSTEM

tablespace for the user's temporary tablespace will result in the

installation FAILING, as using SYSTEM for workareas is not supported.

Choose the PERFSTAT user's Temporary tablespace.

TABLESPACE_NAME CONTENTS DB DEFAULT TEMP TABLESPACE

------------------------------ --------- --------------------------

TEMP TEMPORARY *

Pressing

tablespace (identified by *) being used.

Enter value for temporary_tablespace: TEMP

.....

.....

Creating Package STATSPACK...

Package created.

No errors.

Creating Package Body STATSPACK...

Package body created.

No errors.

NOTE:

SPCPKG complete. Please check spcpkg.lis for any errors.

Check the Logfiles: spcpkg.lis, spctab.lis, spcusr.lis

Adjusting the STATSPACK Collection Level

STATSPACK has two types of collection options, level and threshold. The level parameter controls the type of data collected from Oracle, while the threshold parameter acts as a filter for the collection of SQL statements into the stats$sql_summary table.

SQL> SELECT * FROM stats$level_description ORDER BY snap_level;

Level 0 This level captures general statistics, including rollback segment, row cache, SGA, system events, background events, session events, system statistics, wait statistics, lock statistics, and Latch information.

Level 5 This level includes capturing high resource usage SQL Statements, along with all data captured by lower levels.

Level 6 This level includes capturing SQL plan and SQL plan usage information for high resource usage SQL Statements, along with all data captured by lower levels.

Level 7 This level captures segment level statistics, including logical and physical reads, row lock, itl and buffer busy waits, along with all data captured by lower levels.

Level 10 This level includes capturing Child Latch statistics, along with all data captured by lower levels.

You can change the default level of a snapshot with the statspack.snap function. The i_modify_parameter => 'true' changes the level permanent for all snapshots in the future.

SQL> exec statspack.snap(i_snap_level => 6, i_modify_parameter => 'true');

Create, View and Delete Snapshots

sqlplus perfstat/perfstat

SQL> exec statspack.snap;

SQL> select name,snap_id,to_char(snap_time,'DD.MM.YYYY:HH24:MI:SS')

"Date/Time" from stats$snapshot,v$database;

NAME SNAP_ID Date/Time

--------- ---------- -------------------

AKI1 4 14.11.2004:10:56:01

AKI1 1 13.11.2004:08:48:47

AKI1 2 13.11.2004:09:00:01

AKI1 3 13.11.2004:09:01:48

SQL> @?/rdbms/admin/sppurge;

Enter the Lower and Upper Snapshot ID

Create the Report

sqlplus perfstat/perfstat

SQL> @?/rdbms/admin/spreport.sql

Statspack at a Glance

What if you have this long STATSPACK report and you want to figure out if everything is running smoothly? Here, we will review what we look for in the report, section by section. We will use an actual STATSPACK report from our own Oracle 10g system.

Statspack Report Header

STATSPACK report for

DB Name DB Id Instance Inst Num Release RAC Host

------------ ----------- ------------ -------- ----------- --- ----------------

AKI1 2006521736 AKI1 1 10.1.0.2.0 NO akira

Snap Id Snap Time Sessions Curs/Sess Comment

--------- ------------------ -------- --------- -------------------

Begin Snap: 5 14-Nov-04 11:18:00 15 14.3

End Snap: 6 14-Nov-04 11:33:00 15 10.2

Elapsed: 15.00 (mins)

Cache Sizes (end)

~~~~~~~~~~~~~~~~~

Buffer Cache: 24M Std Block Size: 4K

Shared Pool Size: 764M Log Buffer: 1,000K

Note that this section may appear slightly different depending on your version of Oracle. For example, the Curs/Sess column, which shows the number of open cursors per session, is new with Oracle9i (an 8i Statspack report would not show this data).

Here, the item we are most interested in is the elapsed time. We want that to be large enough to be meaningful, but small enough to be relevant (15 to 30 minutes is OK). If we use longer times, we begin to lose the needle in the haystack.

Statspack Load Profile

Load Profile

~~~~~~~~~~~~ Per Second Per Transaction

--------------- ---------------

Redo size: 425,649.84 16,600,343.64

Logical reads: 1,679.69 65,508.00

Block changes: 2,546.17 99,300.45

Physical reads: 77.81 3,034.55

Physical writes: 78.35 3,055.64

User calls: 0.24 9.55

Parses: 2.90 113.00

Hard parses: 0.16 6.27

Sorts: 0.76 29.82

Logons: 0.01 0.36

Executes: 4.55 177.64

Transactions: 0.03

% Blocks changed per Read: 151.59 Recursive Call %: 99.56

Rollback per transaction %: 0.00 Rows per Sort: 65.61

Here, we are interested in a variety of things, but if we are looking at a "health check", three items are important:

The Hard parses (we want very few of them)

Executes (how many statements we are executing per second / transaction)

Transactions (how many transactions per second we process).

This gives an overall view of the load on the server. In this case, we are looking at a very good hard parse number and a fairly light system load (1 - 4 transactions per second is low).

Statspack Instance Efficiency Percentage

Next, we move onto the Instance Efficiency Percentages section, which includes perhaps the only ratios we look at in any detail:

Instance Efficiency Percentages (Target 100%)

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Buffer Nowait %: 100.00 Redo NoWait %: 99.99

Buffer Hit %: 95.39 In-memory Sort %: 100.00

Library Hit %: 99.42 Soft Parse %: 94.45

Execute to Parse %: 36.39 Latch Hit %: 100.00

Parse CPU to Parse Elapsd %: 59.15 % Non-Parse CPU: 99.31

Shared Pool Statistics Begin End

------ ------

Memory Usage %: 10.28 10.45

% SQL with executions>1: 70.10 71.08

% Memory for SQL w/exec>1: 44.52 44.70